Contrary to popular opinion, Apple appears to be ahead in AI — and in some cases seems far in front of the competition. The revelation comes from an Apple white paper that hasn’t gotten much attention, but should.

A white paper on Apple’s Foundation Model, the company’s homegrown large language model (LLM) that powers Apple Intelligence, reveals two important facts: the model is the safest of those tested, and it is highly competitive with both Meta’s Llama and OpenAI’s GPT-4. This seems to debunk a big myth about Apple’s AI efforts: that the company’s privacy-first philosophy would hold it back.

In tests of writing and summarization, the Apple Foundation Model performs about as well as the top LLMs from OpenAI, Meta, Mistral AI and others. And thanks to Apple’s strict guidelines for expunging harmful content, human evaluators repeatedly rank it as the safest model of the group, by a wide margin.

It looks like Apple Intelligence could be off to a good start.

Apple Intelligence: Both safe and savvy

Image: Apple

Earlier this year, scores of headlines claimed Apple was losing the AI race because it did not have its own LLM. Apple itself fueled the controversy by showing Siri integrated with ChatGPT at WWDC, its annual developer conference. This led many to assume that OpenAI was powering all of Apple Intelligence, which isn’t the case.

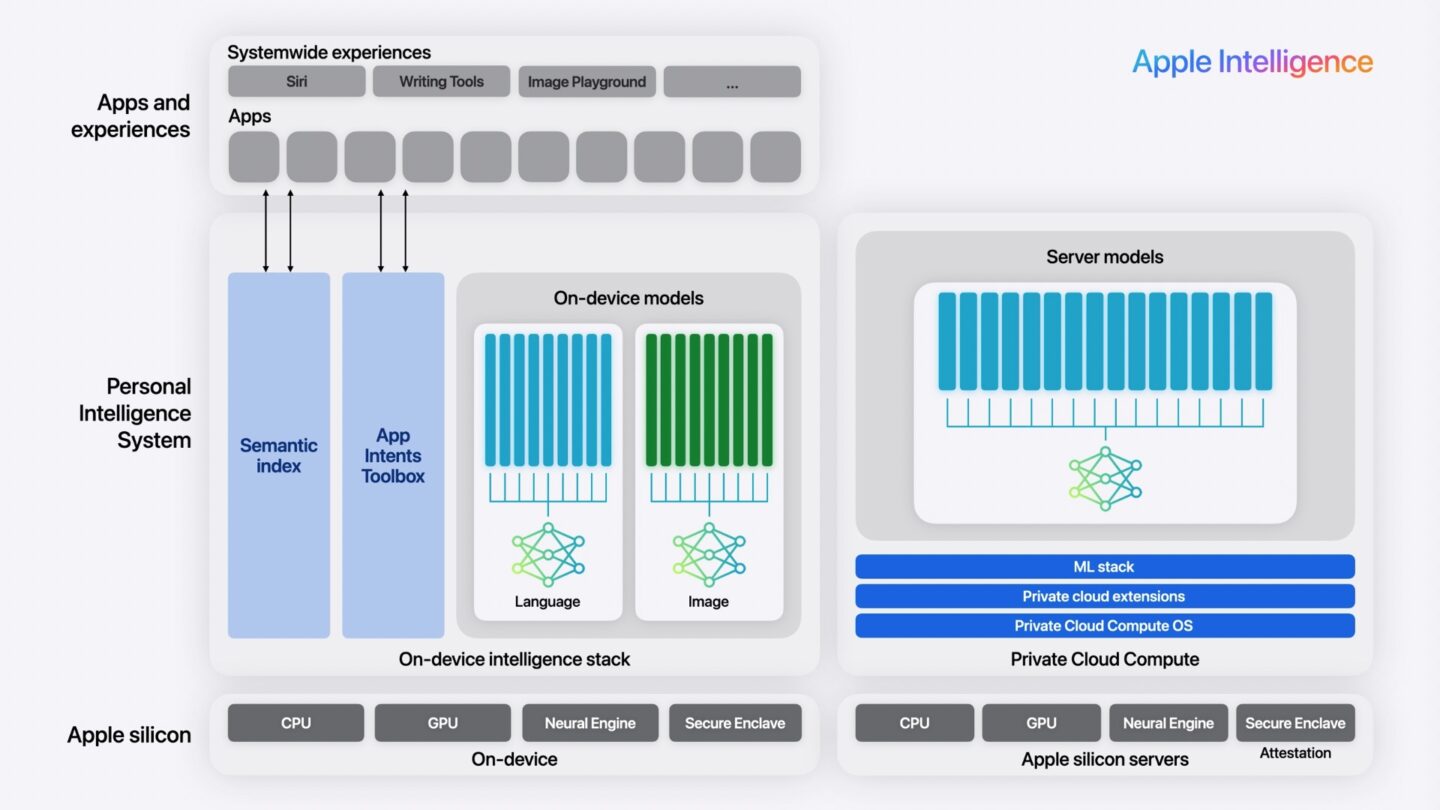

Apple Intelligence is a broad marketing term covering a bunch of new AI features. It will be available on Apple’s major software platforms: iOS, iPadOS and macOS. Apple announced the first features at WWDC24: a smarter, more capable Siri; writing tools that generate and summarize text; and image generation in Messages and other apps.

At the core of these features is the Apple Foundation model (AFM). The Foundation model is to Apple Intelligence what GPT-4 is to ChatGPT. That means how well the Foundation model performs will directly shape how well Apple Intelligence features work.

An academic white paper authored by over 150 Apple employees outlines in great detail the training, performance and evaluation of the Foundation model.

Apple Intelligence is smarter than you think

Image: Apple

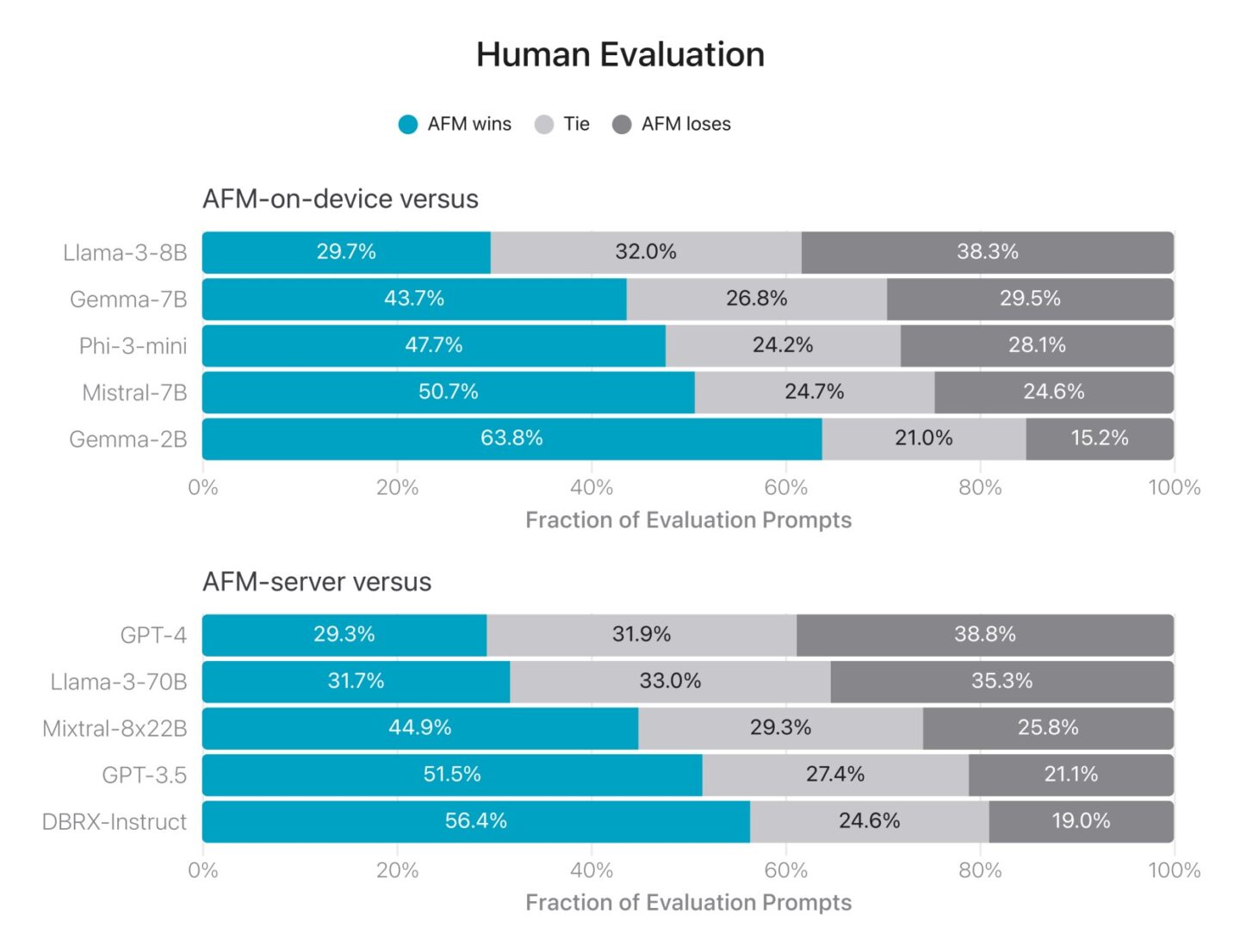

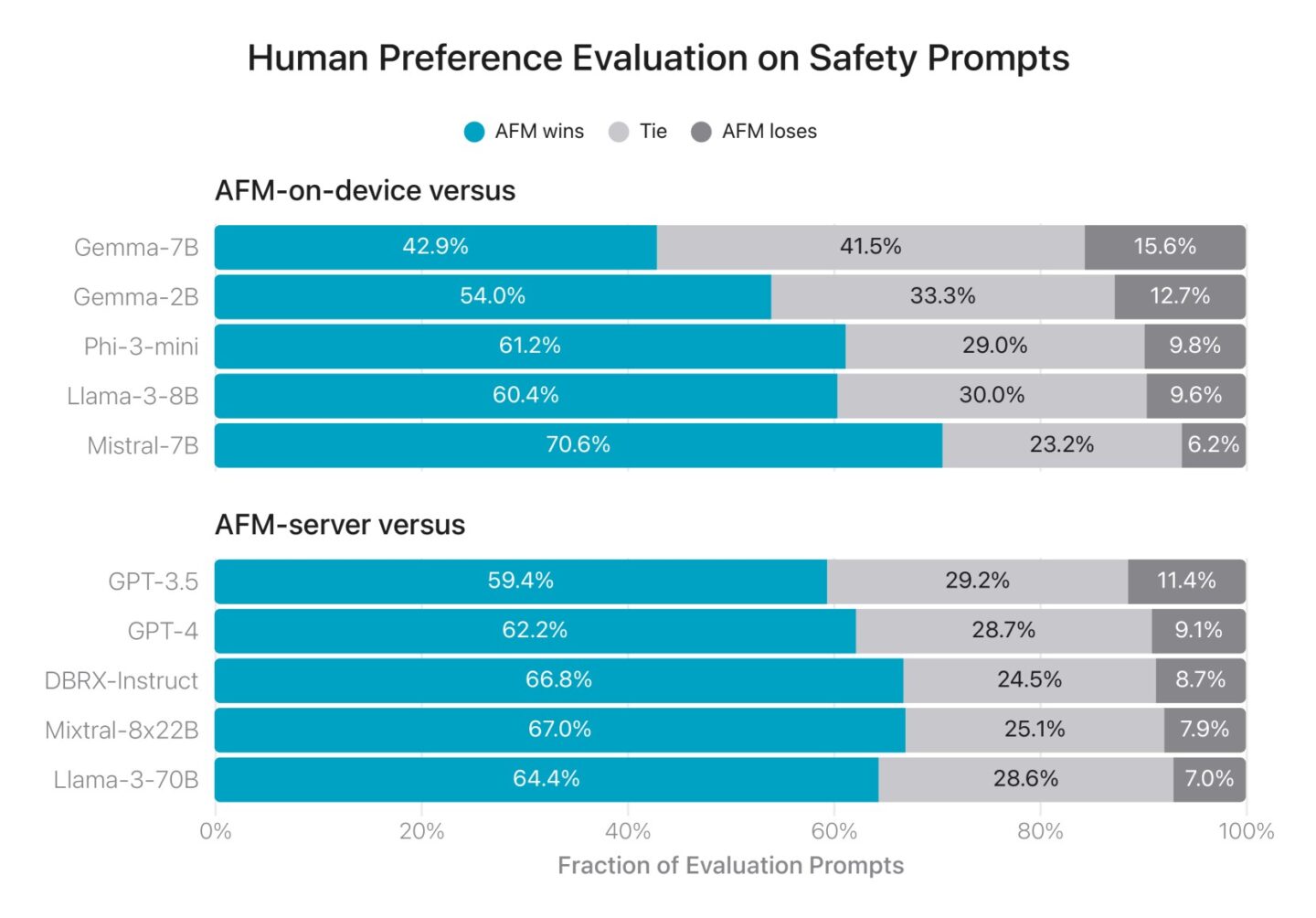

In a human evaluation test, 1,393 prompts were fed to the Apple Foundation model and to competing models. The on-device version was tested against top open-source LLMs that run on-device, and the server version against commercial LLMs running in the cloud.

The results are similar in both categories, on-device and in the cloud. Against the latest and greatest, Llama-3 and GPT-4, Apple’s model comes out slightly behind. Against the rest, it’s a tight race: Apple’s model beats Mistral and GPT-3.5 more than 50% of the time.

Image: Apple

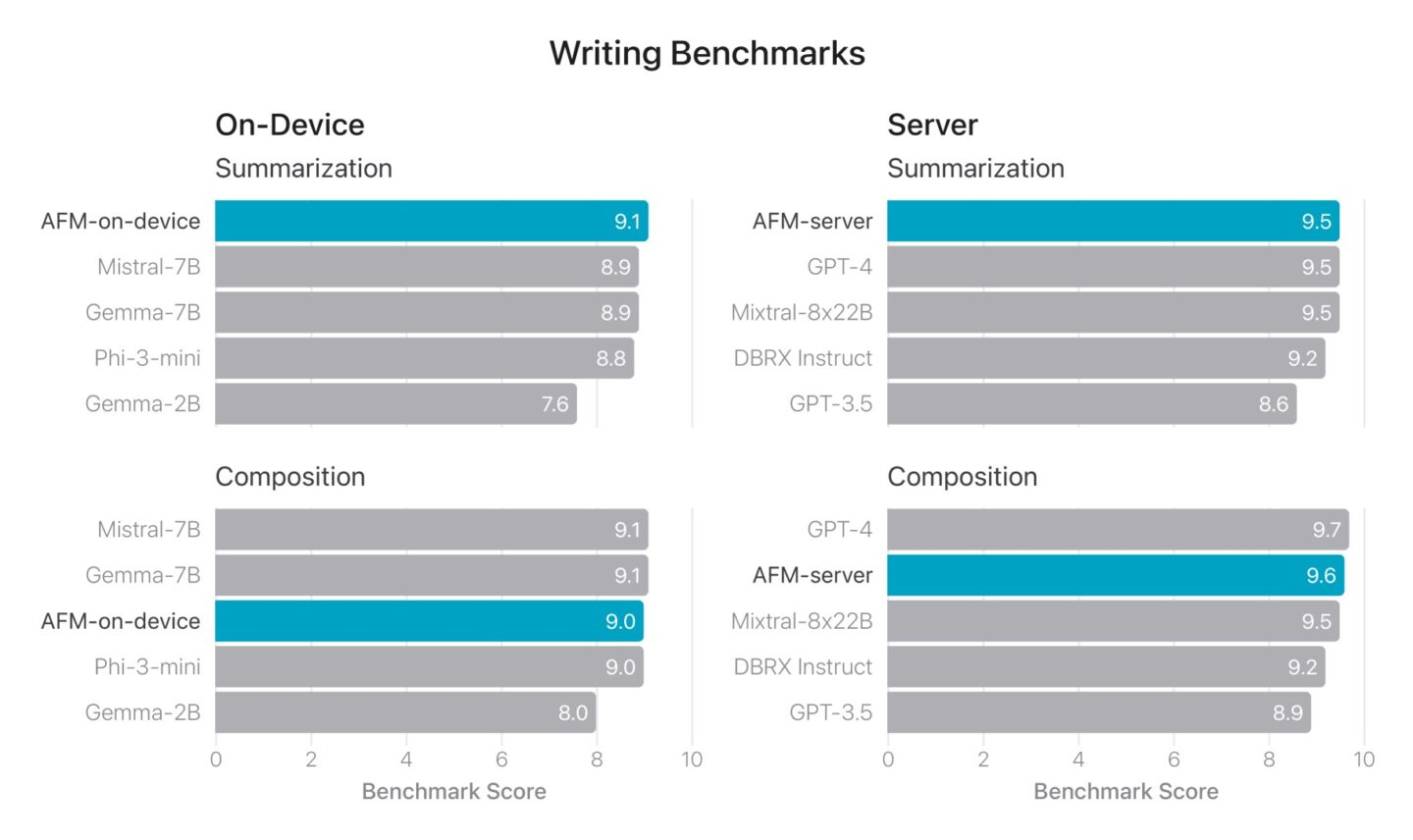

Additional benchmarks are even more favorable. Apple’s model is just as capable at text summarization, narrowly taking first place both on-device and in the cloud. In text generation and composition, widely considered the bread and butter of OpenAI’s ChatGPT, OpenAI holds only the slightest lead over Apple.

Image: Apple

More responsible and safe AI

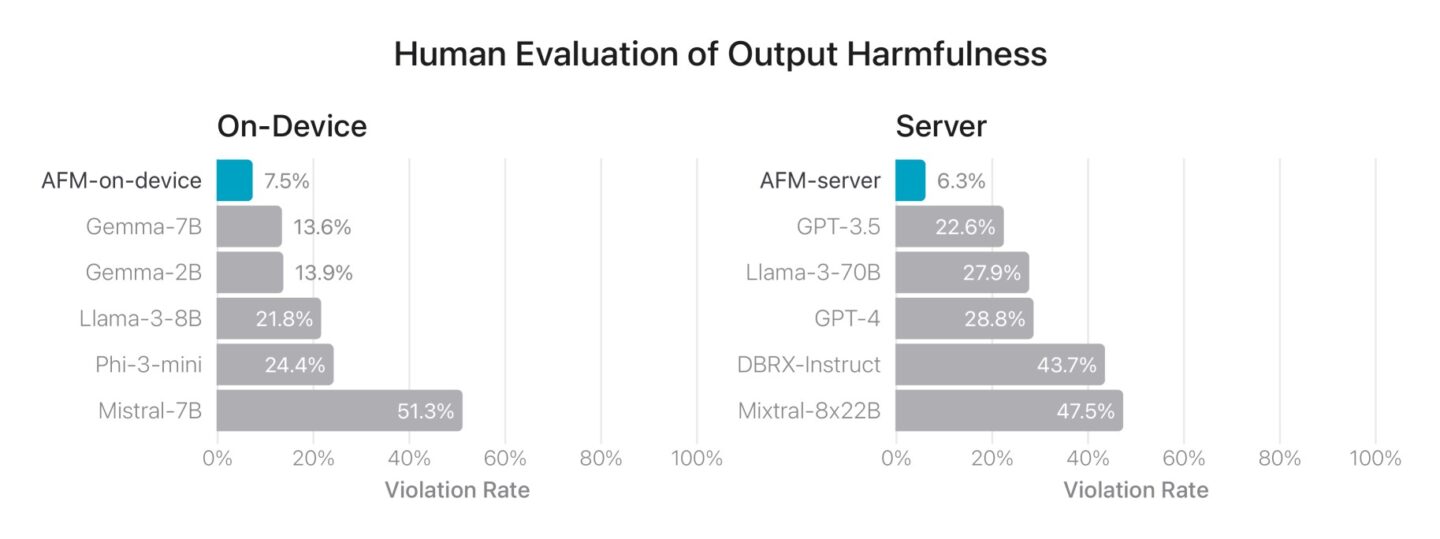

When it comes to avoiding content that is discriminatory, hateful, exclusionary, harmful, sexual, illegal or violent, the Apple Foundation model is the safest by a huge margin. In a human evaluation test, AFM-on-device produced harmful content about half as often as the next-best model, and roughly a third as often as Meta’s Llama-3. AFM-server fared even better: GPT-4 produced harmful content more than 4.5 times as often.

Image: Apple

In nine out of ten human preference tests, output from the Apple Foundation model was deemed safer over 50% of the time. In all ten tests, it tied at least 23% of the time.

Apple rigorously culled harmful content from its training data. According to the white paper, input data goes through “extensive quality filtering” for safety and profanity, “using heuristics and model-based classifiers.” Sanitizing the training data has a big impact on the model’s output, because a model is far less likely to reproduce content it has never been shown.

Other players in the field have been heavily criticized for training their AIs on YouTube videos, all of Reddit and the entire web: basically, everything they can get their hands on. Apple is not entirely free of criticism here, since the company only revealed Applebot-Extended, which lets publishers opt out of having their content used for AI training, after its Applebot crawler had already scraped the web. On the consumer side, however, users can trust the Apple Intelligence writing tools more than the competition’s.

Taking action

Image: Apple

The latest beta versions of iOS 18.1 and macOS Sequoia 15.1 include only the writing and image editing tools, but much more is to come. In a future release, Siri will be able to understand the apps on your phone, take plain-language commands, and execute them on your behalf.

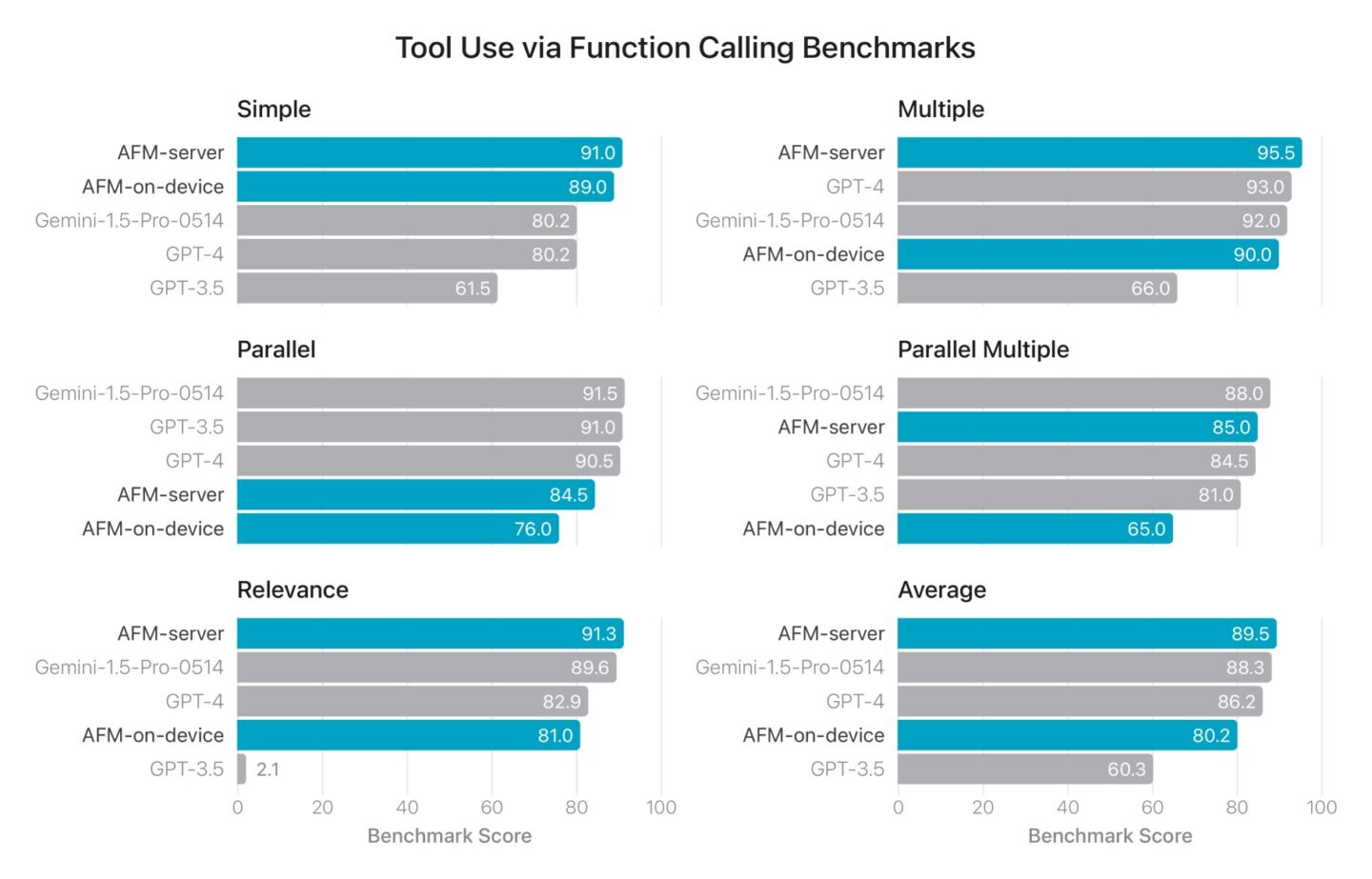

The paper’s tool use test offers a litmus test for how well this feature will work. In it, “given a user request and a list of potential tools with descriptions, the model can choose to issue tool calls” in a format the OS can understand. Unlike in the other benchmarks, AFM-on-device does so well here that it stays competitive with server-based LLMs, and AFM-server posts the best average performance of the group.

Other tests outlined in the paper show the Foundation model is best-in-class at following instructions as well.

Apple isn’t lagging in the AI race after all

The received wisdom is that Apple is years behind in the AI race, largely because it supposedly lacked its own LLM.

In fact, Apple Intelligence is built on a solid foundation: the Foundation model. According to the research, it’s nearly as powerful as the best models available and far safer. Apple’s no AI laggard at all.